(Continued post as can only tag 10 users at a time)

@Candy

Judge 1: In my top 14

Pros: Writeup is detailed and well-organized. It’s always nice to see concrete efforts to optimize code, since these can generalize to wider swaths of the problem space.

Potential Changes: Going to call out the feature_importances work, to make sure it addresses known issues with off-the-shelf techniques; see [2305.10696] Unbiased Gradient Boosting Decision Tree with Unbiased Feature Importance. The “basic features” section appears to be missing details.

Judge 2: 5/7

Cool to see the multithreading runtime improvements. Fairly standard modelling. More focus on validation would improve the submission.

Judge 3: 3/5

Technically strong but lack of model evaluation metrics prevents a clear assessment of predictive performance, limiting practical confidence.

@uncharted

Judge 1: In my top 14

Pros: Demonstrates solid data science fundamentals. Very detailed writeup. I appreciate the use of an external dataset to help address unbalanced labeling. The approach emphasizes cross-chain data, with justification for design choices. Tone feels authoritative and authentic. Nice insights from the graph data. Cool to see the value of the bucketing approach and discussion of its performance.

Potential Changes: I appreciate thorough and detailed reports, but this one might benefit from editing for conciseness and clarity. I'm kind of drowning in details at a certain point. Also going to request more details and/or reflection on the feature importances measurements, to address issues with off-the-shelf techniques; see [2305.10696] Unbiased Gradient Boosting Decision Tree with Unbiased Feature Importance.

Judge 2: 6/7

Very well developed features and model. Especially nice to see the graph-based components. I applaud the effort to add more external sybil labels but I wonder if this helped or hurt the model performance given the prevalence of false sybils in many online lists.

Judge 3: 4/5

Great depth and rigor across all phases, and the write-up was pleasant to read. I loved how thoughtful it was in interpreting results and iterating on the approach

@ewohirojuso

Judge 1: Pros: Gives a clear and thorough overview of the process, particularly the data-cleaning and preprocessing steps. This may be helpful for formatting or pre-cleaning data in the future.

Potential Changes: Generally, I would like to see more novelty or unique insight. This report is similar to others in technique and description. On a more granular, fundamental level, there are concerns about setting all missing values to the constant -1: this shifts the distribution (for instance, lowering the mean and median) and introduces de facto unrealistic outliers. It would be good to know more about this design choice.
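To illustrate the concern about constant -1 imputation, here is a minimal sketch using made-up values (not taken from any submission). It assumes a non-negative feature with two missing entries and compares summary statistics before and after filling the gaps with -1. The names `raw`, `observed`, and `imputed` are hypothetical.

```python
import statistics

# Hypothetical non-negative feature; None marks a missing entry.
raw = [3.0, 5.0, None, 8.0, None, 12.0, 7.0]

# Statistics over the values that were actually observed.
observed = [v for v in raw if v is not None]

# The same column after replacing every missing entry with -1.
imputed = [-1.0 if v is None else v for v in raw]

obs_mean, obs_median = statistics.mean(observed), statistics.median(observed)
imp_mean, imp_median = statistics.mean(imputed), statistics.median(imputed)

print(f"observed: mean={obs_mean:.2f}, median={obs_median:.2f}")
print(f"imputed:  mean={imp_mean:.2f}, median={imp_median:.2f}")
# -1 now sits below every real value, so it looks like an extreme low reading.
print(f"imputed minimum: {min(imputed)}")
```

In this toy case both the mean and the median drop after imputation, and -1 becomes the smallest value in the column. Tree-based models can often route such a sentinel into its own branch, which may be why the choice was made, but that is a guess and worth stating explicitly in a writeup.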

Judge 2: 4/7

Solid approach that hits the basics well including cross validation and feature development. Nothing outside of the standard and would have been nice to see some performance metrics.

Judge 3: 3/5

Thoughtful preprocessing and label correction, but a bit too conventional and missing clear performance metrics.

@xingxiang

Judge 1: Pros: Clear overview of process. Appreciate the coherent design philosophy: “Convert complex blockchain data into feature vectors usable by machine learning” and “Automate model training and optimization processes to ensure optimal performance”.

Potential Changes: The actual prediction models (e.g. Random Forest, XGB, etc.) being used in the ensemble are never discussed. This is an important piece of missing information. For imputing missing values, there are concerns about setting all missing values to the constant -1: this shifts the distribution (for instance, lowering the mean and median) and introduces de facto unrealistic outliers. It would be good to know more about this design choice. Would also be nice to have more representations of information (e.g. some images, tables, etc.) and to share any insight gained from working on this.

Judge 2: 5/7

Clear and modular pipeline making good considerations of hyperparameters, cross validation, and class imbalance. Doesn’t try anything novel but does the basics well and has acceptable metrics

Judge 3: 3/5

A modular system with good engineering and strong performance, but the overall approach sticks to standard tabular ML without pushing creative boundaries.

@gespsy

Judge 1: In my top 14

Pros: Great writeup that is thorough and structured, without feeling formulaic. Design choices are justified. Insight is provided through discussion of less-performant techniques. Appreciate the visuals that help explain correlation in the original data, as well as the optimization approach.

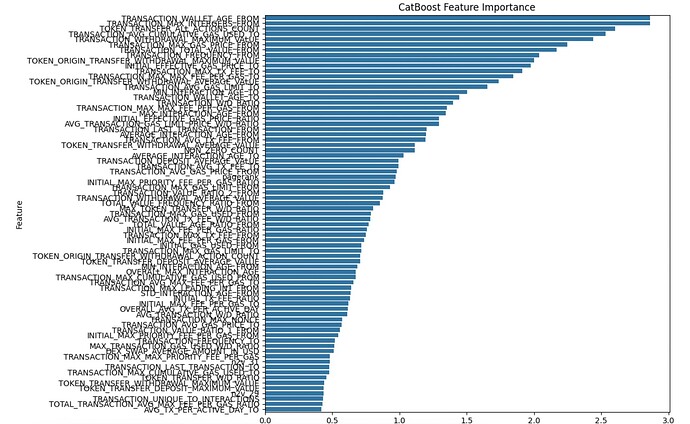

Potential Changes: Would be nice to see more discussion of which features offered insight, beyond the feature importances. Off-the-shelf techniques for this are known to have issues. [2305.10696] Unbiased Gradient Boosting Decision Tree with Unbiased Feature Importance

Judge 2: 5/7

Solid execution but the approach sticks to standard approaches without new insights or deep performance gains

Judge 3: 3/5

Technically solid and methodically sound. However, it sticks to conventional approaches without exploring more innovative angles or deeper behavioral patterns.

@mahboobbiswas

Judge 1: Pros: The modeling steps are well-explained. I appreciate the granularity of the optimization… Useful insight into the data is provided, e.g. the cleanliness/usability of the data based on blockchain.

Potential Changes: There are some jarring transitions/inclusions in the writeup that look copy/pasted from the original instructions. Would like to see feature importances cross-validated somehow, since there are known weaknesses in off-the-shelf techniques. While many of the performance metrics look good, nearly 15% of the positive-label predictions are false, which I would view as an issue to address.
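For readers less familiar with the metric behind the "nearly 15%" remark: the share of positive-label predictions that are false is the false discovery rate, which equals one minus precision. The sketch below uses hypothetical confusion-matrix counts chosen only to reproduce a 15% rate; they are not the submission's actual numbers.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of positive predictions that are correct: TP / (TP + FP)."""
    if tp + fp == 0:
        raise ValueError("no positive predictions were made")
    return tp / (tp + fp)


def false_discovery_rate(tp: int, fp: int) -> float:
    """Fraction of positive predictions that are wrong: FP / (TP + FP)."""
    if tp + fp == 0:
        raise ValueError("no positive predictions were made")
    return fp / (tp + fp)


# Hypothetical counts: 100 addresses flagged as sybil, 15 of them wrongly.
tp, fp = 85, 15
print(f"precision = {precision(tp, fp):.2f}")
print(f"false discovery rate = {false_discovery_rate(tp, fp):.2f}")
```

Under these assumed counts, roughly one in seven flagged addresses would be a legitimate user, which is why a judge might treat this as an issue even when accuracy or recall look strong.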

Judge 2: 6/7

I like how this reads like a notebook tutorial. Could be a great guide for newcomers and achieves solid performance.

Judge 3: 4/5

Great depth and thoroughness! Covered every stage of the pipeline, from data prep to advanced evaluation, and explored aspects like dynamic thresholding and graph-based features.

@alertcat

Judge 1: In my top 14

Pros: GPU-accelerated approach offers technical novelty and potential optimization. I personally enjoy the esthetics of the visuals. The verbal descriptions of the features (e.g. “network interaction depth”) are clear and helpful.

Potential Changes: Since GPU acceleration is one of the primary focuses, it would be great to understand how it improved performance (either in prediction accuracy or efficiency). Some of the graphics have formatting/detail issues. For instance, one image says it compares feature importance for two different models – but only one of the models is shown in the chart.

Judge 2: 5/7

Cool to see RAPIDS being used. Besides that the approach is fairly standard and well executed.

Judge 3: 4/5

Detailed, structured, and comprehensive write-up that clearly communicates the methodology and findings. Demonstrated great rigor from problem framing to technical implementation and insights.

@aabo0090

Judge 1: In my top 14

Pros:

The model and data cleaning pipeline seem well-engineered. It’s nice to see Logistic Regression, which offers a high degree of interpretability. Really nice to see the “Future Work” section being aware of the potential for graph/network data.

Potential Changes: The writeup is a bit vague and bland. Would like to see more detail about key features in the documentation (not just references to code). There are unique considerations for discussion in Production Consideration (such as drift detection), but it would be great to see more detail/evidence for the claims.

Judge 2: 4/7

Clear and methodical approach with an interesting ensemble.

Judge 3: 3/5

A solid, production-focused work with excellent operational thinking, but the lack of quantitative metrics reduces confidence in its effectiveness.

@Limonada

Judge 1: In my top 6

Pros: Writeup is clear and descriptive. Offers insight beyond standard/tutorial level, with design choices well-justified. Experimented with a wide variety of techniques, rather than just jumping on popular ones. Nice engineering/design to incorporate the multiple models into a meta-model.

Potential Changes: With performance this high, overfitting is always a concern. Would be helpful to have sanity checks and/or some degree of interpretability.

Judge 2: 4/7

Write-up is light on feature details but the author pays attention to class imbalance, cross validation, and building a good meta model that achieves high performance.

Judge 3: 3/5

Great but standard implementation. I liked the consideration given to handling class imbalance, but the work lacked deeper analysis or exploration of innovative features

@davidgasquez

Judge 1: In my top 3

Pros: Very strong writeup; appreciate both the conversational tone and the resources list. It’s great to see well-researched design, with acknowledgment of prior work in the field. I really appreciate the willingness to experiment with new approaches, and the completely-appropriate-for-sybils graph-based approach of node2vec in particular. The graphics are wonderful. Thanks for the explanation of the exploratory process, incorporating revisions and offering insight rather than just giving a final product.

Potential Changes: Not so much changes to existing work as excited discussion of potential future work. Would be awesome to see some of the ideas towards the end of the post explored more in the future. Since the model performance is so strong, it would be interesting to see what the misclassifications are, thinking in terms of a potential adversarial generator.

Judge 2: 6.5/7

Awesome write up and modeling work. Love the inclusion of graph features, especially community-based ones. Crystal clear thought process

Judge 3: 5/5

One of the best submissions. A well-executed approach with great use of graph features and external resources. Great write-up as well.